Who decides what can and can't be shared on social platforms? Mark Zuckerberg? Elon Musk? Or the users themselves?

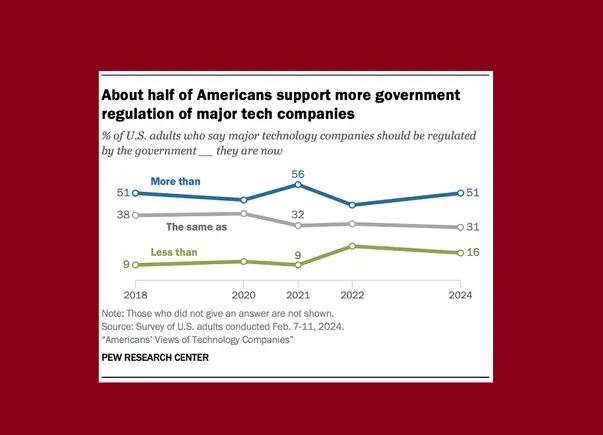

A new survey from Pew Research finds that just over half of Americans believe social platforms should be regulated by the government.

As the chart shows, 51% of Americans believe social platforms should be regulated by an official government-appointed body, down from 56% who supported the same in 2021.

That 2021 survey came on the heels of the 2020 election and the Capitol riot, and in that climate it was only natural that concerns were raised about the impact social platforms could have on political speech.

But now, with new elections looming in the U.S., those concerns appear to have eased, though most people still believe the government should do more to police content on social apps.

The issue of social media regulation is complex: while it's not ideal for executives at mass communication platforms to dictate what can and can't be shared on each app, regional variations and rules also exist, making a universal content approach difficult.

Meta has long advocated for increased government regulation of social media, which would take the pressure off its own team to rule on important questions around speech. The coronavirus pandemic has intensified debate on that topic, while the use of social media by political candidates and the suppression of dissent in certain places have also put platform decision-makers in a difficult position about how to act.

In reality, Meta, X, and all the platforms would rather not police what users say at all, but advertiser expectations and government regulations sometimes force them to take action.

But these calls shouldn't be made by a few suited people in a conference room, debating the merits of any particular statement.

This is something Meta has tried to highlight with its Oversight Board project, which appoints a team of experts to review Meta's moderation decisions following challenges from users.

The problem is that, while the group operates independently, it is funded by Meta, which leads many to see it as somewhat biased. Government-funded moderation groups would face a similar accusation, that they are dependent on the current administration. And while handing the job to them would reduce the pressure on platforms to make decisions, it would not lessen scrutiny of this element.

Perhaps Elon Musk is moving in the right direction with his focus on crowdsourced moderation, with Community Notes playing a bigger role in X's process. This allows people to decide what is right and what is wrong, what should be allowed and what should be challenged, and there is evidence that Community Notes has had a positive impact on reducing the spread of misinformation within the app.

At the same time, however, Community Notes currently cannot scale to the level necessary to address all content concerns, and Notes are added too slowly to stop the spread of certain claims before they have an impact.

Perhaps another solution would be to let users up-vote and down-vote every post, which could speed up the same process and stop the spread of misinformation faster.

But voting can also be manipulated. And, again, authoritarian governments are already very active in suppressing speech they don't like. Giving them the power to make such decisions isn't going to improve the process.

This is why it's such a complex issue, and while the public clearly wants more government oversight, it's also understandable why governments might want to distance themselves from such oversight.

But they must be held accountable: if regulators are enforcing rules that acknowledge the power and influence of social platforms, they should also consider enacting moderation standards in the same vein.

All questions about what is and isn't allowed would then be left to elected officials, and you could register your support for, or opposition to, their approach at the ballot box.

This is by no means a perfect solution, but it seems better than many of the current approaches.